Skimle is AI-powered qualitative data analysis software that turns interviews, reports, and other text documents into structured insights — combining deep professional rigor with business speed.

Unlike chatbots that retrieve random passages or manual tools requiring weeks of coding, Skimle systematically analyzes every document to create a transparent, editable knowledge structure.

Skimle is a tool meant to empower human experts to do more. Academics can upload tens of interview transcripts to create initial codings with full transparency. Public administration analysts can summarise hundreds of consultation feedback documents to help inform decision making. Market and customer researchers can analyse thousands of feedback responses and interviews to identify opportunities. Consultants can quickly summarise client interviews, data room contents, expert calls and other data to spot themes. Legal professionals can get on top of large volumes of data to find the insights that matter.

Skimle is used by researchers at 20+ universities, policy analysts in EU governments, consultants at boutique firms and market research professionals, all of whom need both speed and a solid, defensible methodology.

Why qualitative data analysis is broken (and how to fix it!)

Most knowledge workers face the same challenge when analyzing qualitative data: how do I get systematic insights out of all the data I have collected?

Manual analysis is rigorous but impossibly slow:

- Manual thematic analysis of 30 interviews takes a week, even with a pragmatic approach

- Summarising 500+ consultation responses typically takes months of analyst time

- Analyzing 200 customer feedback survey responses takes so long that insights are delayed or the analysis is simply skipped

Previous-generation tools like NVivo and MAXQDA aren't really helpful:

- Require weeks to learn - there are even dedicated university courses on this!

- Cost €800-1,600 per year in licence fees

- Are still fundamentally manual (you highlight every paragraph yourself)

- Projects still take weeks or months to complete

Basic AI tools like ChatGPT or simple RAG systems are fast but unreliable:

- Attractive promise: "Upload documents, ask questions, get summaries"

- But answers change every time you ask, and there is a risk of hallucinations

- No way to verify whether you got comprehensive coverage or the AI skipped text

- Black-box results you can't defend to stakeholders

As a result, knowledge workers had three choices when it comes to qualitative data:

- Slow and rigorous (traditional qualitative analysis software)

- Fast and superficial (ChatGPT-style document chat - with all the risks and downsides)

- Or... just skim the documents and trust your gut feel (unfortunately time pressure forces this)

It's no wonder qualitative research is often seen as a second-class citizen compared to quantitative analysis. Tools like Excel, Tableau, SPSS, R, Stata and beyond enable fast, rigorous and accessible analysis of large numerical datasets. They produce replicable findings and make it easy to explore the data. Nothing comparable existed for qualitative analysis, which felt wrong given how much genuine insight text data can hold.

After 20 years in academia (Henri) and 20 years in consulting (Olli), we saw that a properly built AI solution could be a game changer. We created an AI-assisted workflow that automates the rigorous approach step by step, with full transparency. You no longer have to choose, because Skimle delivers academic-grade rigour with the speed of AI:

- Systematic analysis of every document (like an academic researcher or subject matter expert would do)

- Full traceability from insights to source quotes (no black-box AI outputs)

- Editable, spreadsheet-like structure you control ("Excel for text")

- Results in hours, not weeks

How Skimle works

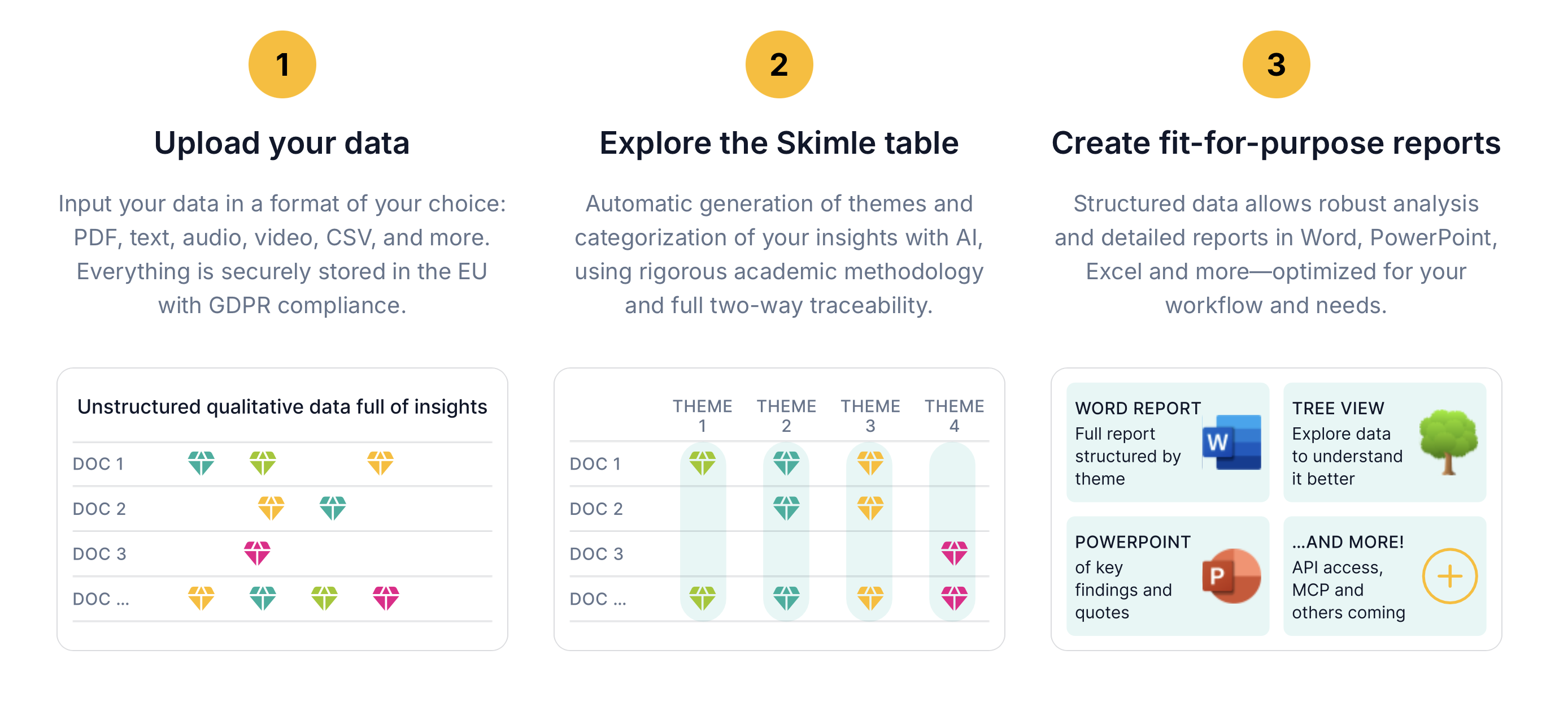

Skimle follows a systematic three-stage process inspired by academic thematic analysis but automated with AI.

Across the stages, the human expert has full transparency and control - be it to select insight types, trace individual insights back to verbatim quotes, change the categorisation of insights or select what type of exports to make. Skimle also enables collaboration across multiple experts from the same team or organisation.

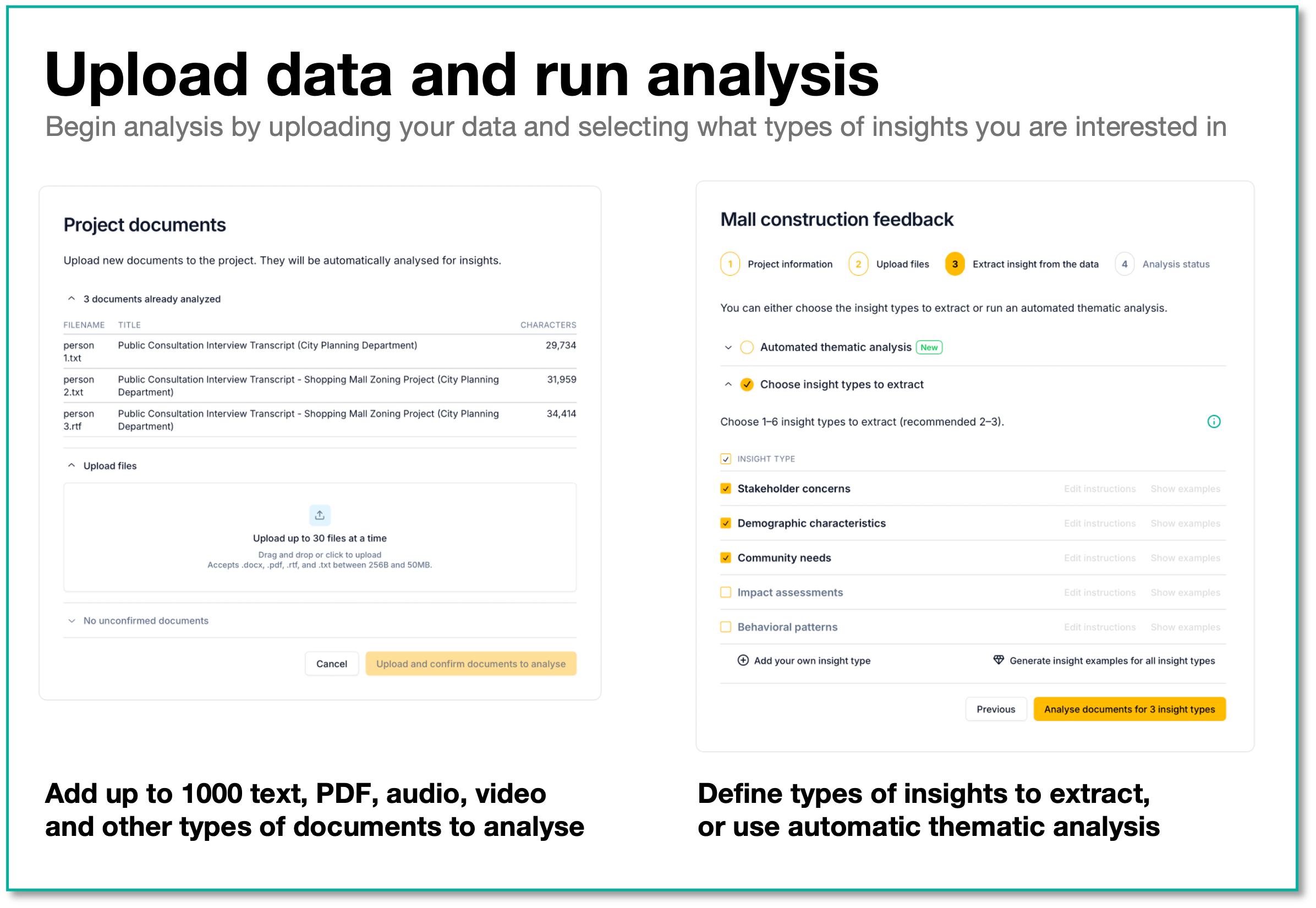

Stage 1: Upload & process

What you do:

- Upload documents in any format: PDF, Word, text, audio, video and beyond (more formats added based on user requests!)

- Specify what types of insights you want to extract (e.g., "risks", "customer complaints", "policy recommendations", "expressions of emotion"), or let Skimle identify them automatically (based on, e.g., the types of questions asked, recurring themes and other signals)

Skimle processes the uploaded documents:

- Transcribes audio/video with high-accuracy AI (if needed)

- Extracts text from PDFs, images, scanned documents

- Generates metadata automatically (date, source, speaker, organization, etc.)

- Begins systematic document-by-document analysis

The AI-assisted thematic analysis workflow is Skimle's greatest innovation. Where a classic "chat with your documents" or RAG-based approach simply chunks the documents, dumps them into a vector database and queries them at runtime, Skimle runs a systematic thematic analysis on the contents. Processing the data on entry, identifying categories and structuring the insights into a comprehensive Skimle table (what each document says about each category) makes it possible to explore the data, maintain full transparency, capture all relevant insights and edit the analysis. With other AI approaches, different queries retrieve different passages, and there is no way to know whether the analysis is comprehensive.

Skimle's approach (structured thematic analysis):

- Reads each document systematically, section by section

- Uses hundreds of atomic LLM calls to understand meaning of each passage

- Identifies insights and iteratively codes them to categories

- Builds hierarchical category structure across all documents
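To make the "analyze everything up front" idea concrete, here is a minimal Python sketch of a document-by-document coding loop. It is not Skimle's patent-pending implementation: the function names, the keyword-matching `code_passage` stand-in for an atomic LLM call, the fixed category list and the blank-line sectioning are all assumptions for illustration, and the sketch leaves out category discovery, iterative recoding and the category hierarchy.

```python
from dataclasses import dataclass


@dataclass
class Insight:
    document: str
    category: str
    quote: str  # verbatim passage the insight was coded from


def code_passage(passage: str, categories: dict[str, list[str]]) -> list[str]:
    """Hypothetical stand-in for one atomic LLM call.

    Returns every category whose keywords occur in the passage; a real
    system would ask a language model instead of matching keywords.
    """
    text = passage.lower()
    return [name for name, keywords in categories.items()
            if any(keyword in text for keyword in keywords)]


def analyse_up_front(documents: dict[str, str],
                     categories: dict[str, list[str]]) -> dict[str, dict[str, list[Insight]]]:
    """Code every section of every document once, before any question is asked.

    Returns a document x category table: table[doc_id][category] -> insights.
    """
    table: dict[str, dict[str, list[Insight]]] = {}
    for doc_id, text in documents.items():
        row: dict[str, list[Insight]] = {}
        # Hypothetical sectioning: split on blank lines and code each section separately.
        for passage in (p.strip() for p in text.split("\n\n")):
            if not passage:
                continue
            for category in code_passage(passage, categories):
                row.setdefault(category, []).append(Insight(doc_id, category, passage))
        table[doc_id] = row
    return table


# Hypothetical example data.
documents = {
    "interview_01": "The price is too high.\n\nSupport was helpful.",
    "interview_02": "Onboarding took weeks.\n\nPricing felt fair.",
}
categories = {
    "pricing": ["price", "pricing"],
    "support": ["support"],
    "onboarding": ["onboarding"],
}
table = analyse_up_front(documents, categories)
for doc_id, row in table.items():
    print(doc_id, {category: len(insights) for category, insights in row.items()})
```

The design choice the sketch illustrates is that the coding cost is paid once at upload; later questions read from `table` instead of re-searching the raw text, so the same question always sees the same coded passages.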

The approach is patent-pending, and a validation of the methodology is forthcoming in a peer-reviewed journal.

The key difference:

- RAG / basic AI chatbots: "Search at query time" → inconsistent, superficial

- Skimle: "Analyze everything up front" → comprehensive, structured, stable

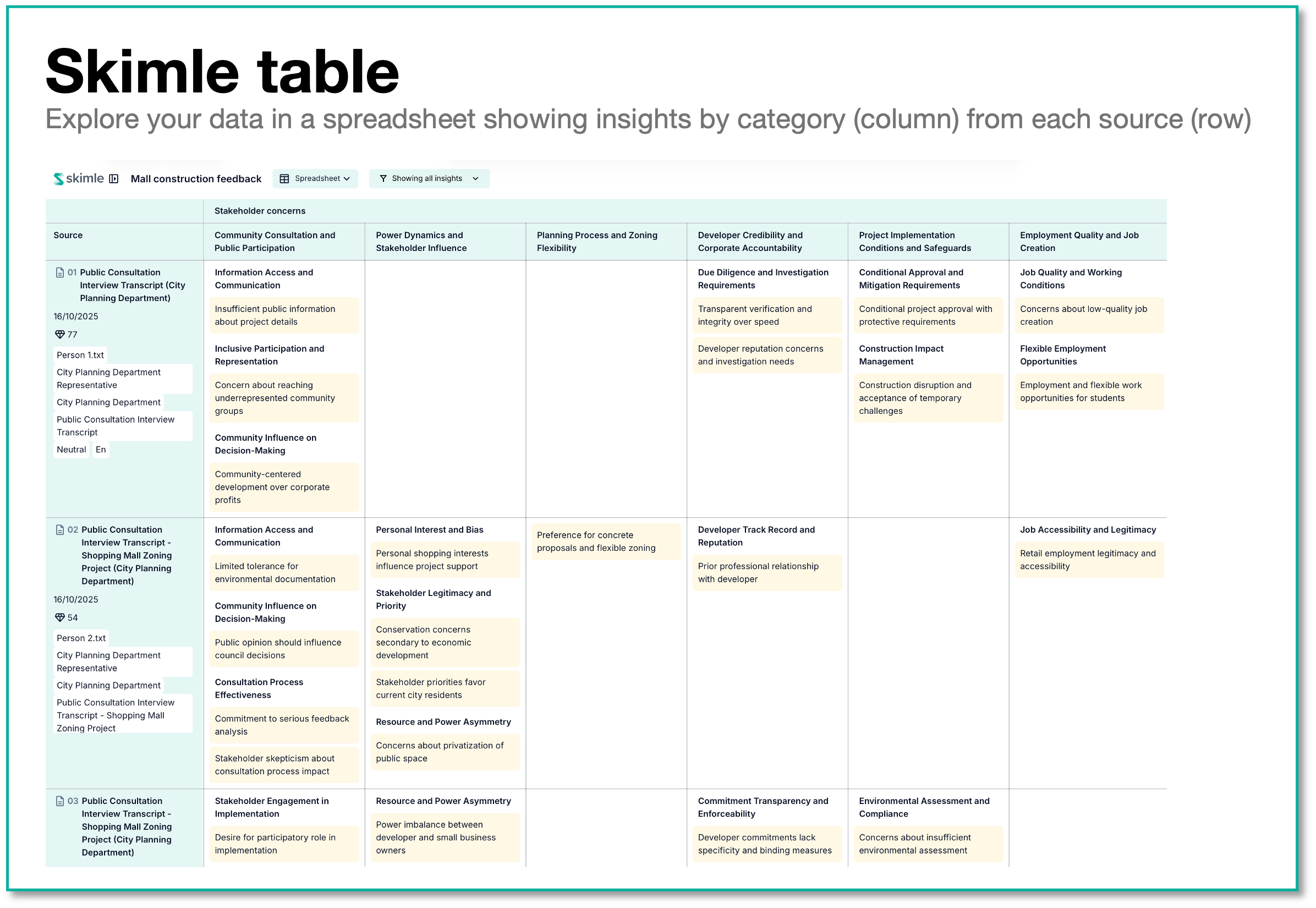

Stage 2: Explore and refine

The Skimle table is your primary workspace for exploring the data:

- Spreadsheet-like view: Documents as rows, themes as columns

- Each cell shows what that document says about that theme (one or more insights)

- Click any cell: see verbatim quotes + link to source document

- Filter by metadata, and add new metadata fields as needed (e.g., add a "Has children" field and Skimle will search each interview to identify the answer)
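The table described above can be pictured as a simple nested data structure: one row per document, one column per theme, and a list of linked quotes in each cell. The following Python sketch is illustrative only; the `Quote` and `Row` classes, the field names and the `cell`/`filter_rows` helpers are hypothetical and are not Skimle's internal schema or API.

```python
from dataclasses import dataclass, field


@dataclass
class Quote:
    text: str    # verbatim passage
    source: str  # id of the document the quote links back to


@dataclass
class Row:
    metadata: dict[str, str]
    # Theme name -> the insights (quotes) this document contributes to that theme.
    cells: dict[str, list[Quote]] = field(default_factory=dict)


# Documents as rows, themes as columns (hypothetical example data).
table: dict[str, Row] = {
    "interview_01": Row(
        {"segment": "SME", "has_children": "yes"},
        {"pricing": [Quote("The price is too high.", "interview_01")]},
    ),
    "interview_02": Row(
        {"segment": "Enterprise", "has_children": "no"},
        {
            "pricing": [Quote("Pricing felt fair.", "interview_02")],
            "onboarding": [Quote("Onboarding took weeks.", "interview_02")],
        },
    ),
}


def cell(table: dict[str, Row], doc_id: str, theme: str) -> list[Quote]:
    """What one document says about one theme (empty if nothing was coded)."""
    return table[doc_id].cells.get(theme, [])


def filter_rows(table: dict[str, Row], **conditions: str) -> dict[str, Row]:
    """Keep only the documents whose metadata matches every condition."""
    return {doc_id: row for doc_id, row in table.items()
            if all(row.metadata.get(key) == value for key, value in conditions.items())}


# Usage: what do interviewees with children say about pricing?
for doc_id in filter_rows(table, has_children="yes"):
    print(doc_id, [quote.text for quote in cell(table, doc_id, "pricing")])
```

Keeping the source id on every quote is what allows a click on a cell to lead straight back to the original document.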

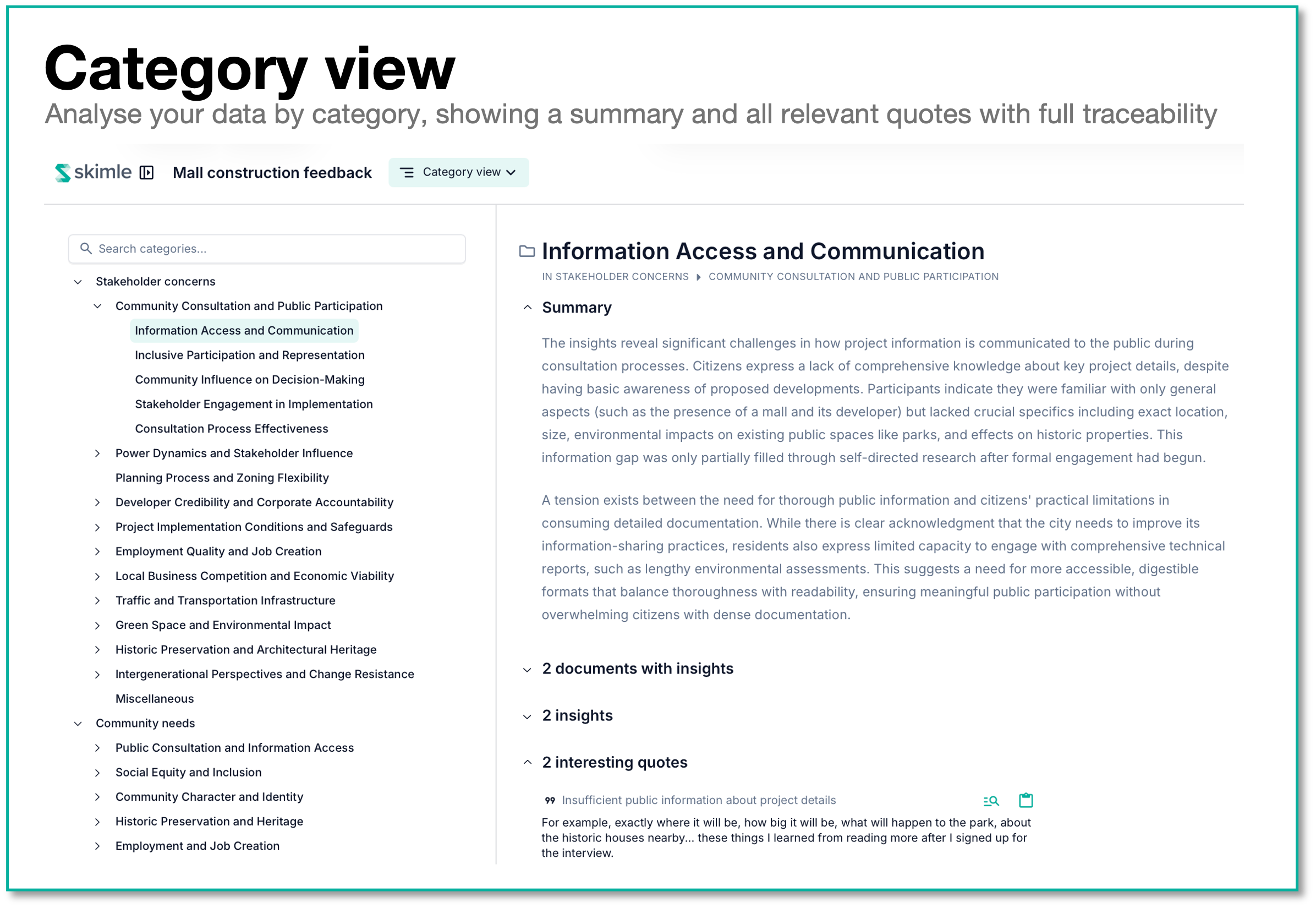

Category view to dig deeper into each theme:

- Hierarchical navigation of categories and sub-categories

- Full summary at each level

- Shows most insightful and typical quotes, as well as the full set of quotes related to the category

Document view lets you review the original sources:

- Raw text as uploaded to the tool or transcribed from audio

- Skimle highlights every passage it coded

- Passages link to the categories they were coded to

- View full metadata of the document

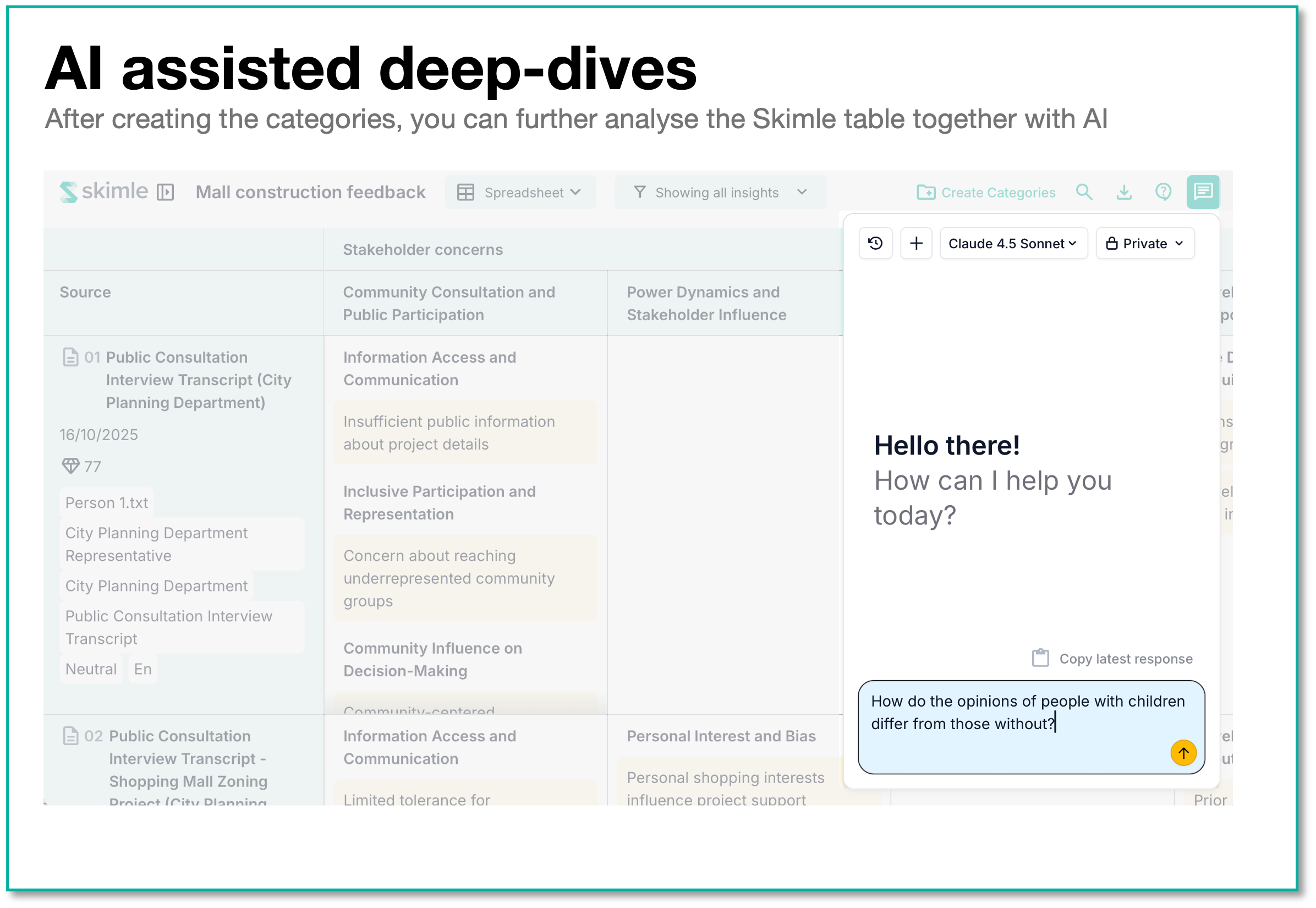

AI Chat with context:

- Ask: "How do answers differ between people with and without children?"

- Skimle knows your categories AND metadata, and gives a structured answer with quotes

- Unlike ChatGPT: answers are stable and comprehensive (based on structured analysis, not retrieval each time)

- Choose the optimal language model based on specific requirements for your analysis (currently supports Claude Sonnet, Haiku, GPT-5, GPT-5 Mini, GPT-4.1)

This all flows together: get the big picture with the Skimle table, then zoom into individual categories in the Category view to read their summaries. Follow any quote back to the Document view, and use AI chat to explore the data further.

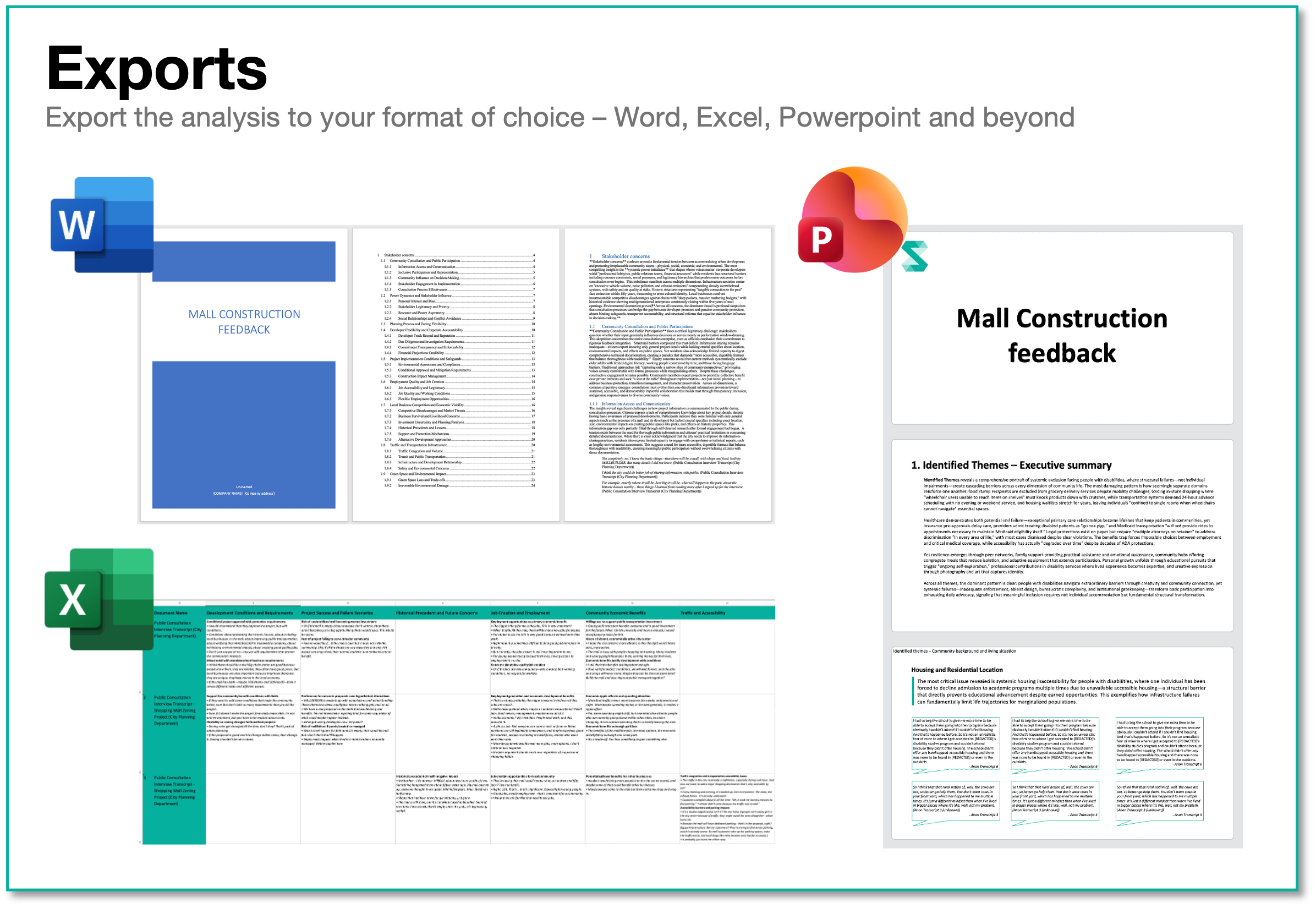

Stage 3: Export

After you have explored and refined the data, you can export it to your tool of choice. Individual users can choose from our ready export formats (Word, PowerPoint, Excel), and together with our organisational customers we are developing new export formats relevant for different workflows.

Word report:

- Table of contents showing the full category hierarchy

- Executive summary for each section, category and sub-category

- All relevant quotes shown for each section

- Great starting point for your bespoke research report

- See example: EU Digital Omnibus feedback summary (.pdf conversion from Word export)

PowerPoint deck:

- Executive summary slide

- Key themes with evidence

- Deep-dive slides per theme with verbatim quotes

Excel export:

- Skimle table in spreadsheet format

- Allows you to pivot, filter, analyze in Excel

- Easy to share with stakeholders who don't use Skimle (yet!)

- See example: Criticism-category in EU Digital Omnibus feedback (Excel export)

We're constantly developing new export formats based on user feedback. Next in line is API/MCP access, enabling agents to query the structured knowledge. Integrating human-readable, structured data with custom workflows enables complex analyses that are impossible with basic RAG or unstructured context.

How does Skimle compare vs. other qualitative analysis methods?

If you are an expert who needs to analyse and synthesise large sets of qualitative information, you essentially have four approaches to choose from:

| | Ad Hoc | Rigorous | Ad Hoc + AI | Skimle |
|---|---|---|---|---|
| Approach | Cursory analysis based on reading through materials | Identify categories and tag text manually or with NVivo, MAXQDA etc. | Feed documents directly or via RAG to an LLM | AI-assisted workflow combining academic rigour with speed |
| Rigorous | − | ✓ | − | ✓ |
| Transparent | − | ✓ | − | ✓ |
| Fast | ✓ | − | ✓ | ✓ |
| Versatile | ✓ | − | − | ✓ |
| Time to analyse 40 interviews | 4 hours (skim only) | 40 hours | 5 minutes (but unreliable) | 10 hours (full review) |

"Ad hoc" is when you glance through the reports to check for anything surprising, write a brief summary and maybe throw in a word cloud to impress. There is nothing wrong with this approach, and because of time constraints and cost pressures many business professionals had to take this route before AI tools were available. As a result, qualitative analysis is often seen as a second-class citizen compared to crunching the numbers.

"Rigorous" is what academic researchers and serious analysts do when facing large sets of qualitative data. It relies on previous-generation tools like NVivo, MAXQDA, ATLAS.ti or bespoke coding systems to go through each interview manually: creating the transcript, analysing themes, categorising each quote and adjusting the categories iteratively. This results in publishable-quality research, but often takes months or even years for any sizeable dataset.

"Ad hoc + AI" is the new kid on the block. It is used by tools like NotebookLM, by many domain-specific workflow tools (e.g., Harvey, myjunior, Ailuyze and Dovetail), in custom RAG (Retrieval-Augmented Generation) workflows, and by users who upload all their documents into the prompt of a standard tool like ChatGPT or Claude. The documents are either fed directly to a Large Language Model or stored in a searchable vector database from which the most relevant passages are selected for each query. This approach works nicely in demos, but developers and users are increasingly seeing its limitations: expert-level insight depends on analysing and structuring the data, not just searching embeddings at runtime.

Why do simplistic one-shot and RAG-based approaches fail? Think of them as an analyst whose notes are scattered on little post-its around the office. Even if they are good at finding the right notes for your question (for example, using an effective embedding and retrieval system), the answer you get always depends on which notes they happened to pull for that query. Ask differently, and you get a different answer from the black box. You can never know whether the answer was comprehensive or whether something was hallucinated. In short: RAGs to riches doesn't work!
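The post-it analogy can be reproduced with a toy example. The Python sketch below is a deliberately simplified assumption, not how any named product works: "retrieval" here is plain word overlap with a top-k cut-off instead of embedding search, and the up-front "pricing" column is coded by hand. Two phrasings of the same question pull different passages, while the pre-coded column always returns every relevant one.

```python
import re

# Hypothetical example passages, all of which are about pricing.
passages = {
    "interview_01": "The price is too high for small teams.",
    "interview_02": "Pricing felt fair given the support.",
    "interview_03": "Cost was the main reason we left.",
}


def words(text: str) -> set[str]:
    """Lower-cased word set, used as a crude stand-in for an embedding."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(query: str, k: int = 1) -> list[str]:
    """Toy query-time retrieval: rank passages by word overlap, keep the top k."""
    query_words = words(query)
    ranked = sorted(passages,
                    key=lambda doc_id: len(query_words & words(passages[doc_id])),
                    reverse=True)
    return ranked[:k]


# Up-front coding, done once (here by hand): every passage about pricing.
pricing_column = sorted(passages)

print(retrieve("Is the price high?"))  # ['interview_01']
print(retrieve("Was the cost fair?"))  # ['interview_03']
print(pricing_column)                  # all three interviews
```

Neither single query surfaces all three pricing comments. A larger `k` only helps if you already know how many relevant passages exist, which is exactly the coverage question the retrieval step cannot answer on its own.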

Skimle takes a completely different approach: analysing and structuring the documents on upload enables robust and stable analysis. Skimle uses atomic LLM calls to understand the meaning of each sentence and create relevant categories across documents. The editable and clear Skimle table allows humans and AI to make sense of the data and enables tailored transparent reports. By rigorously digesting the content up front, it is possible to identify common patterns, conflicting views and changes over time.

Note - what Skimle isn't:

- Not a replacement for understanding your data (you still need to review and interpret)

- Not a survey tool (use Qualtrics, SurveyMonkey or other tools for collection, Skimle for analysis)

- Not a magic "generate research paper" button (it's a power tool, not autopilot)

- Not for purely quantitative data (use Excel/SPSS/R for that)

Skimle is built for professionals, by professionals

Skimle is based in Finland and developed "by researchers for researchers" and "by business people for business people": our team combines decades of experience from conducting high quality manual thematic analysis from academia and consulting with expert software engineers and designers.

We are trusted by Finnish government ministries, over 20 different universities, dozens of consulting and market research firms and large companies. All our data is stored within the EU and processed according to our strict privacy and GDPR policy.

Keen to understand more?

Have a look at our Signal & Noise blog, our FAQ pages, different use cases by sector, and our YouTube channel for more in-depth information on how Skimle can be used in practice. We are also ready to demo our product or explore together how Skimle could fit your needs. Get in touch with us!